Deep Residual Adaptive Neural Network Based Feature Extraction for Cognitive Computing with Multimodal Sentiment Sensing and Emotion Recognition Process

Abstract

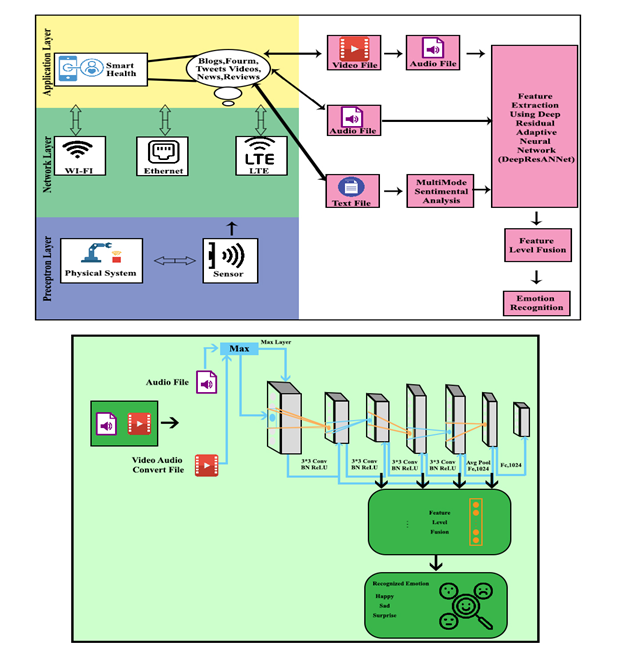

In healthcare settings, automatic recognition of patients’ emotions is a useful facilitator: feedback on patient status and satisfaction levels can be provided automatically to healthcare stakeholders. Multimodal human sentiment analysis is an active research topic in artificial intelligence (AI) and is a much finer-grained classification problem than most other classification tasks. Inspired by the emotional processing procedure described in cognitive science, performance on both binary and multi-class classification tasks can be improved by splitting complex problems into simpler ones that are easier to handle. This article proposes an automated audio-visual emotion recognition model for the healthcare industry. The model uses a Deep Residual Adaptive Neural Network (DeepResANNet) for feature extraction, in which scores are computed from the differences between the feature and class values of adjacent instances. Based on the output of feature extraction, positive and negative sub-nets are trained separately by the fusion module, thereby improving accuracy. The proposed method is evaluated on the eNTERFACE’05, BAUM-2 and MOSI databases and compared with three standard methods across several metrics. On the eNTERFACE’05 dataset, DeepResANNet achieves 97.9% accuracy, 51.5% RMSE, 42.5% RAE and 44.9% MAE in 78.9 sec. On the BAUM-2 dataset, it achieves 94.5% accuracy, 46.9% RMSE, 42.9% RAE and 30.2% MAE in 78.9 sec. On the MOSI dataset, it achieves 82.9% accuracy, 51.2% RMSE, 40.1% RAE and 37.6% MAE in 69.2 sec. Across the three databases, the highest accuracy (97.9%) is obtained on eNTERFACE’05; the lowest error rates are obtained on BAUM-2 (30.2% MAE and 46.9% RMSE); and the lowest RAE and shortest response time are obtained on MOSI (40.1% RAE in 69.2 sec).
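
For readers unfamiliar with the reported metrics, the following minimal Python sketch shows the textbook definitions of accuracy, MAE, RMSE and RAE. It is an illustration only, not the authors' evaluation code: the function names and sample values are hypothetical, and the abstract does not state how the error metrics were scaled to percentages.

```python
import math

def accuracy(labels_true, labels_pred):
    """Fraction of predicted labels that match the true labels."""
    matches = sum(t == p for t, p in zip(labels_true, labels_pred))
    return matches / len(labels_true)

def mae(y_true, y_pred):
    """Mean absolute error: average of |true - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared difference."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def rae(y_true, y_pred):
    """Relative absolute error: total absolute error divided by the total
    absolute error of a baseline that always predicts the mean of y_true."""
    mean_true = sum(y_true) / len(y_true)
    numerator = sum(abs(t - p) for t, p in zip(y_true, y_pred))
    denominator = sum(abs(t - mean_true) for t in y_true)
    return numerator / denominator

# Hypothetical sample values, chosen only to exercise the functions.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 4.0, 4.0]
labels_true = [0, 1, 1, 0]
labels_pred = [0, 1, 0, 0]

print("accuracy:", accuracy(labels_true, labels_pred))
print("MAE:", mae(y_true, y_pred))
print("RMSE:", rmse(y_true, y_pred))
print("RAE:", rae(y_true, y_pred))
```

Multiplying each value by 100 gives a percentage, which is one plausible reading of how the figures in the abstract are expressed.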

Article Details

How to Cite

Arora, G., Sabharwal, M., Kapila, P., Paikaray, D., Vekariya, V., & T, N. (2022). Deep Residual Adaptive Neural Network Based Feature Extraction for Cognitive Computing with Multimodal Sentiment Sensing and Emotion Recognition Process. International Journal of Communication Networks and Information Security (IJCNIS), 14(2), 189–202. https://doi.org/10.17762/ijcnis.v14i2.5507

Section

Research Articles

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.